What is an OpenFlow Controller?

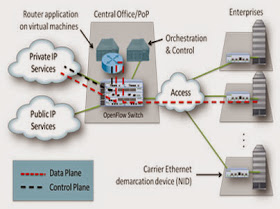

An OpenFlow Controller is a type of SDN Controller that uses the OpenFlow protocol. An SDN Controller is the strategic control point in a software-defined network (SDN). An OpenFlow Controller uses the OpenFlow protocol to connect to and configure the network devices (routers, switches, etc.) and to determine the best path for application traffic. A Controller can also use other southbound protocols and interfaces, such as OpFlex and NETCONF (with YANG data models), to name a few.

SDN Controllers can simplify network management, handling all communications between applications and devices to effectively manage and modify network flows to meet changing needs. When the network control plane is implemented in software, rather than firmware, administrators can manage network traffic more dynamically and at a more granular level. An SDN Controller relays information to the switches/routers ‘below’ (via southbound APIs) and the applications and business logic ‘above’ (via northbound APIs).

In particular, OpenFlow Controllers create a central control point to oversee a variety of OpenFlow-enabled network components. The OpenFlow protocol is designed to increase flexibility by eliminating proprietary protocols from hardware vendors.
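The idea of a central controller programming OpenFlow-enabled devices can be illustrated with a minimal Python sketch of a flow table. It is a simplification under stated assumptions: the `FlowEntry` and `FlowTable` classes, the field names, and the action strings are hypothetical and do not reproduce the OpenFlow wire format. The sketch only shows the general pattern in which a controller installs entries with match fields and priorities, and the switch applies the highest-priority matching entry.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FlowEntry:
    """One simplified flow entry: match fields, a priority, and an action."""
    match: Dict[str, str]   # keys that are absent act as wildcards
    priority: int
    action: str             # hypothetical action string, e.g. "output:2" or "drop"


@dataclass
class FlowTable:
    """A simplified stand-in for the flow table inside one switch."""
    entries: List[FlowEntry] = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        """Called by the (hypothetical) controller to program the switch."""
        self.entries.append(entry)

    def lookup(self, packet: Dict[str, str]) -> Optional[str]:
        """Return the action of the highest-priority matching entry, or None on a miss."""
        matching = [
            e for e in self.entries
            if all(packet.get(k) == v for k, v in e.match.items())
        ]
        if not matching:
            return None  # a real switch could hand the packet to the controller here
        return max(matching, key=lambda e: e.priority).action


table = FlowTable()
table.install(FlowEntry({"ip_dst": "10.0.0.2"}, priority=10, action="output:2"))
table.install(FlowEntry({"ip_dst": "10.0.0.2", "tcp_dst": "23"}, priority=20, action="drop"))

web = table.lookup({"ip_dst": "10.0.0.2", "tcp_dst": "80"})
telnet = table.lookup({"ip_dst": "10.0.0.2", "tcp_dst": "23"})
miss = table.lookup({"ip_dst": "10.0.0.9", "tcp_dst": "80"})
```

In this sketch the more specific, higher-priority entry wins for Telnet traffic, while other traffic to the same host is forwarded and unknown destinations result in a table miss.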

OpenFlow Controller Protocol

SDN and OpenFlow Use Cases

When choosing an SDN Controller, IT organizations should evaluate the OpenFlow functionality supported by the Controller, as well as the vendor roadmap. IT organizations should understand existing functionality and ensure newer versions of OpenFlow and optional features are supported (for example, IPv6 support is not part of the OpenFlow v1.0 standard, but it is part of the v1.3 standard).

A sampling of OpenFlow Controllers includes:

- Reference Learning Switch Controller: This Controller comes with the Reference Linux distribution, and can be configured to act as a hub or as a flow-based learning switch. It is written in C.

- NOX: NOX is a Network Operating System that provides control and visibility into a network of OpenFlow switches. It supports concurrent applications written in Python and C++, and it includes a number of sample controller applications.

- Beacon: Beacon is an extensible Java-based OpenFlow Controller. It is built on the OSGi framework, which allows OpenFlow applications built on the platform to be started, stopped, refreshed, and installed at run-time without disconnecting switches.

- Helios: Helios is an extensible C-based OpenFlow Controller built by NEC, targeting researchers. It also provides a programmatic shell for performing integrated experiments.

- Programmable Flow: Programmable Flow from NEC automates and simplifies network administration for better business agility, and provides a network-wide programmable interface to unify deployment and management of network services with the rest of IT infrastructure. Programmable Flow supports both OpenFlow 1.3 and 1.0, and was the first to be certified by the Open Networking Foundation.

- Vyatta: The Brocade Vyatta Controller is based on OpenDaylight’s Helium release, announced in September 2014. It incorporates OpenStack orchestration, and while it is open sourced, Brocade will offer a commercial version.

- BigSwitch: BigSwitch released a closed-source Controller based on Beacon that targets production enterprise networks. It features a user-friendly CLI for centrally managing your network.

- SNAC: SNAC is a Controller targeting production enterprise networks. It is based on NOX 0.4, and features a flexible policy definition language and a user-friendly interface to configure devices and monitor events.

- Maestro: Maestro is an extensible Java-based OpenFlow Controller released by Rice University. It has support for multi-threading and targets researchers.

OpenFlow is currently being driven by the Open Networking Foundation (ONF).

What is an OpenDaylight Controller?

OpenDaylight is an open source project aimed at enhancing software-defined networking (SDN) by offering a community-led and industry-supported framework. It is open to anyone, including end users and customers, and it provides a shared platform for those with SDN goals to work together to find new solutions.

Under the Linux Foundation, OpenDaylight includes support for the OpenFlow protocol, but can also support other SDN standards. It is meant to be configurable in order to support a plethora of SDN interfaces, including, but not limited to, the OpenFlow protocol.

The OpenFlow protocol, considered the first SDN standard, defines the open communications protocol that allows the Controller to work with the forwarding plane and make changes to the network. This gives businesses the ability to better adapt to their changing needs, and have greater control over their networks.

The OpenDaylight Controller can be deployed in a variety of production network environments. It is built on a modular controller framework and can also support other software-defined networking standards and upcoming protocols. The OpenDaylight Controller exposes open northbound APIs, which are used by applications. These applications use the Controller to collect information about the network, run algorithms to conduct analytics, and then use the Controller to create new rules throughout the network.
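The collect, analyze, and program cycle described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the `TOPOLOGY` dictionary stands in for information an application would fetch through a northbound API, and `shortest_path` and `rules_for_path` are hypothetical helper names, not OpenDaylight calls. The "analytics" step here is a plain breadth-first search for the fewest-hop path.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical topology an application might have collected from the controller:
# switch name -> neighbouring switches.
TOPOLOGY: Dict[str, List[str]] = {
    "s1": ["s2", "s3"],
    "s2": ["s1", "s4"],
    "s3": ["s1", "s4"],
    "s4": ["s2", "s3", "s5"],
    "s5": ["s4"],
}


def shortest_path(topology: Dict[str, List[str]], src: str, dst: str) -> Optional[List[str]]:
    """Breadth-first search returning a fewest-hop path, or None if unreachable."""
    previous: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path: List[str] = []
            current: Optional[str] = node
            while current is not None:
                path.append(current)
                current = previous[current]
            return path[::-1]
        for neighbour in topology.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


def rules_for_path(path: List[str], flow_id: str) -> List[Dict[str, str]]:
    """Turn a path into one forwarding rule per hop (hypothetical rule format)."""
    return [
        {"switch": here, "flow": flow_id, "next_hop": nxt}
        for here, nxt in zip(path, path[1:])
    ]


path = shortest_path(TOPOLOGY, "s1", "s5")
rules = rules_for_path(path, "app-traffic") if path else []
```

In a real deployment the resulting rules would be handed back to the Controller, which would program the switches through a southbound protocol such as OpenFlow.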

The OpenDaylight Controller is implemented solely in software, and is kept within its own Java Virtual Machine (JVM). This means it can be deployed on hardware and operating system platforms that support Java. For best results, it is suggested that the OpenDaylight Controller uses a recent Linux distribution and a Java Virtual Machine 1.7.

Overview of OpenDaylight Controller

What is a Floodlight Controller?

Floodlight is an SDN Controller offered by Big Switch Networks that works with the OpenFlow protocol to orchestrate traffic flows in a software-defined networking (SDN) environment. OpenFlow is one of the first and most widely used SDN standards; it defines the open communications protocol in an SDN environment that allows the Controller (brains of the network) to speak to the forwarding plane (switches, routers, etc.) to make changes to the network.

The SDN controller is responsible for maintaining all of the network rules and providing the necessary instructions to the underlying infrastructure on how traffic should be handled. This enables businesses to better adapt to their changing needs and have better control over their networks.

Released under an Apache 2.0 license, Floodlight can be included in commercial packages. The Apache License is a free software license that allows users to use Floodlight for almost any purpose.

The Floodlight Controller can be advantageous for developers because it is written in Java and lets them easily adapt the software and develop applications. It includes representational state transfer application program interfaces (REST APIs) that make it easier to interface programmatically with the product, and the Floodlight website offers coding examples that aid developers in building on the product.

Tested with both physical and virtual OpenFlow-compatible switches, the Floodlight Controller can work in a variety of environments and can coexist with what businesses already have at their disposal. It can also support networks where groups of OpenFlow-compatible switches are connected through traditional, non-OpenFlow switches.

The Floodlight Controller is compatible with OpenStack, a set of software tools that help build and manage cloud computing platforms for both public and private clouds. Floodlight can be run as the network backend for OpenStack using a Neutron plugin that exposes a networking-as-a-service model with a REST API that Floodlight offers.

How Floodlight Controller works in SDN Environments

What is Ryu Controller?

Ryu, Japanese for “flow,” is an open, software-defined networking (SDN) Controller designed to increase the agility of the network by making it easy to manage and adapt how traffic is handled. In general, the SDN Controller is the “brains” of the SDN environment, communicating information “down” to the switches and routers with southbound APIs, and “up” to the applications and business logic with northbound APIs.

The Ryu Controller provides software components, with well-defined application program interfaces (APIs), that make it easy for developers to create new network management and control applications. This component approach helps organizations customize deployments to meet their specific needs; developers can quickly and easily modify existing components or implement their own to ensure the underlying network can meet the changing demands of their applications.

How a Ryu Controller Fits in SDN Environments

The Ryu Controller source code is hosted on GitHub and managed and maintained by the open Ryu community. OpenStack, which runs an open collaboration focused on developing a cloud operating system that can control the compute, storage and networking resources of an organization, supports deployments of Ryu as the network Controller.

Written entirely in Python, all of Ryu’s code is available under the Apache 2.0 license and open for anyone to use. The Ryu Controller supports Netconf and OF-config network management protocols, as well as OpenFlow, which is one of the first and most widely deployed SDN communications standards.

The Ryu Controller can use OpenFlow to interact with the forwarding plane (switches and routers) to modify how the network will handle traffic flows. It has been tested and certified to work with a number of OpenFlow switches, including Open vSwitch and offerings from Centec, Hewlett Packard, IBM, and NEC.

What is a Cisco XNC (Extensible Network Controller)?

Cisco XNC (Extensible Network Controller) was created to keep up with changing software-defined networking (SDN) environments. Its support of OpenFlow, the most widely used SDN communications standard, helps it integrate into varied SDN deployments so that organizations can better control and scale their networks.

As an SDN Controller, which is the “brains” of the network, Cisco XNC uses OpenFlow to communicate information “down” to the forwarding plane (switches and routers), with southbound APIs, and “up” to the applications and business logic, with northbound APIs. It enables organizations to deploy and even develop a variety of network services, using representational state transfer (REST) application program interfaces (API), as well as Java APIs.

The XNC is Cisco’s implementation of the OpenDaylight stack. Cisco is a contributor to the OpenDaylight initiative, which is focused on developing open standards for SDN that promote innovation and interoperability. Cisco XNC is designed to deliver the cutting edge OpenDaylight technologies as commercial, enterprise-ready solutions.

As a result, Cisco XNC provides functionality required for production environments, such as:

- Monitoring, topology-independent forwarding (TIF), high availability and network slicing applications

- Advanced troubleshooting and debugging capabilities

- Support for the Cisco Open Network Environment (ONE) Platform Kit (onePK), in addition to its OpenFlow support

Cisco XNC can run on a virtual machine (VM) or on a bare-metal server, and can be used to manage any third-party switches, as long as they support OpenFlow. It uses the Open Services Gateway Initiative (OSGi) framework, which offers the modularity and extensibility that business-critical applications require.

What is the Brocade Vyatta SDN Controller?

An SDN Controller in a software-defined network (SDN) is the “brains” of the network. It is the strategic control point in the SDN network, relaying information to the switches/routers ‘below’ (via southbound APIs) and the applications and business logic ‘above’ (via northbound APIs). Recently, as organizations deploy more SDN networks, the Controllers have been tasked with federating between SDN Controller domains, using common application interfaces, such as the open virtual switch database (OVSDB).

The OpenDaylight Project, announced in April 2013 and hosted by the Linux Foundation, was created in order to advance SDN and NFV adoption. It was created as a community-led and industry-supported open source framework. The aim of the OpenDaylight Project is to offer a functional SDN platform that gives users directly deployed SDN without the need for other components. In addition to this, contributors and vendors can deliver add-ons and other pieces that will offer more value to OpenDaylight.

Brocade, as a charter member of the OpenDaylight Project, announced its own OpenDaylight-based SDN Controller in September 2014. The Brocade Vyatta Controller was in development for five months prior to its launch, and will be based on the OpenDaylight Project’s Helium code release, due out this fall. General availability for the Vyatta Controller is on track for November 2014.

OpenDaylight Controller Architecture

Vyatta Controller Features

The Vyatta Controller, the first commercial Controller built directly from OpenDaylight code, allows users to freely optimize their network infrastructure to address the needs of their specific workloads. Features of the Vyatta Controller include:

- A common SDN domain for multi-vendor networks and virtual machines (VM)

- Single-source technical support for Brocade SDN Controller domains

- Easy-to-use installation tools and developer support

- Pre-tested packages and services optimized for the different needs of service providers and traditional network operations

- Flexibility for OpenDaylight applications developed on the Vyatta Controller

The Vyatta Controller is part of Brocade’s “new IP” vision and will incorporate OpenStack orchestration, OpenDaylight-based control, as well as virtual and physical network gear. The Vyatta Controller is open source, but Brocade will offer a commercial version.

Brocade will also offer applications for the Vyatta Controller. The first two will be sold separately from the Controller:

- The Path Explorer: calculates optimal paths through the network; available with the first release of the Vyatta Controller

- Volumetric Traffic Management: recognizes elephant flows and volume-based attacks; slated for early 2015

What is VMware NSX?

VMware NSX is the network virtualization and security platform that emerged from VMware after it acquired Nicira in 2012. The solution de-couples the network functions from the physical devices, in a way that is analogous to de-coupling virtual servers (VMs) from physical servers. In order to de-couple the new virtual network from the traditional physical network, NSX natively re-creates the traditional network constructs in virtual space; these constructs include ports, switches, routers, firewalls, etc. In the past, everyone knew what these things were. It was possible to see and touch the switch port that a server connected to, but now this isn’t possible. Fundamentally, these constructs still exist with VMware NSX, but it is no longer possible to touch them. For this reason, the virtual network is sometimes harder to conceptualize.

There are two different product editions of NSX – NSX for vSphere and NSX for Multi-Hypervisor (MH). It’s speculated they will merge down the road, but for many possible, or soon to be, users of NSX, it doesn’t matter. Here’s why – they are used to support different use cases. NSX for vSphere is ideal for VMware environments, while NSX for MH is designed to integrate into cloud environments that leverage open standards, such as OpenStack.

NSX for vSphere

The most talked about and documented version of VMware NSX is purpose-built for vSphere environments, otherwise referred to as NSX for vSphere. NSX for vSphere will be deployed 90% of the time, as it has native integration with other VMware platforms, such as vCenter and vCloud Automation Center (vCAC). NSX for vSphere offers logical switching, in-kernel routing, in-kernel distributed firewalling, and edge-border L4-7 devices that offer VPN, load balancing, dynamic routing, and firewall (FW) capabilities.

It is the culmination of the original networking solution from VMware, vCloud Networking and Security (vCNS), and the Network Virtualization Platform (NVP) from Nicira. In addition, NSX acts as a platform and integrates with third parties, such as Palo Alto Networks and F5.

NSX for MH

The second edition of VMware NSX is the next-generation NVP product that initially emerged out of Nicira. NSX for MH has no native integration with vCenter because it was purpose-built from the ground up to support any cloud environment, such as OpenStack and CloudStack. As an example, NSX for MH offers native integration into OpenStack, by supporting the OpenStack Neutron APIs. This means OpenStack could be deployed as the cloud management platform (CMP), but NSX will take responsibility for creating and configuring logical ports, logical switches, logical routers, security groups, and other networking services.

While there isn’t native integration with vCenter, it does still, in fact, support vSphere, KVM, and XEN hypervisors, though it contains fewer features than NSX for vSphere, from a networking perspective. There isn’t so-called native integration because a user would not be configuring NSX-MH through a GUI. It’s meant to be API-driven from a cloud platform.

What is Open vSwitch?

In order to define what Open vSwitch (OVS) is, it’s extremely important to first understand virtual switching and the new access layer. In the past, servers would physically connect to a hardware-based switch located in the data center. When VMware created server virtualization the access layer changed from having to be connected to a physical switch to being able to connect to a virtual switch. This virtual switch is a software layer that resides in a server that is hosting virtual machines (VMs). VMs, and now also containers, such as Docker, have logical or virtual Ethernet ports. These logical ports connect to a virtual switch.

There are three popular virtual switches – VMware virtual switch (standard & distributed), Cisco Nexus 1000V, and Open vSwitch (OVS).

OVS was created by the team at Nicira, which was later acquired by VMware. OVS was created to meet the needs of the open source community, since there was no feature-rich virtual switch offering designed for Linux-based hypervisors, such as KVM and XEN. OVS has quickly become the de facto virtual switch for XEN environments, and it is now playing a large part in other open source projects, like OpenStack.

OVS supports NetFlow, sFlow, port mirroring, VLANs, LACP, etc. From a control and management perspective, OVS leverages OpenFlow and the Open vSwitch Database (OVSDB) management protocol, which means it can operate both as a soft switch running within the hypervisor, and as the control stack for switching silicon.

OVS differs from the commercial offerings from VMware and Cisco. One point worth noting about OVS is that there is not a native Controller or manager, like the Virtual Supervisor Module (VSM) in the Cisco Nexus 1000V or vCenter in the case of VMware’s distributed switch. Open vSwitch is meant to be controlled and managed by third-party controllers and managers. For example, it can be driven by using an OpenStack plug-in or directly from an SDN controller, such as OpenDaylight. This doesn’t mean a Controller is necessary; it is possible to deploy OVS on all servers in an environment and let them operate with traditional MAC learning functionality.
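The traditional MAC learning behavior mentioned above can be illustrated with a minimal Python sketch. The `LearningSwitch` class and its method names are hypothetical and are not part of Open vSwitch; the sketch only shows the learn-then-forward-or-flood logic that a standalone Layer 2 switch applies when no Controller is involved.

```python
from typing import Dict, List


class LearningSwitch:
    """A toy Layer 2 switch that learns which port each source MAC lives on."""

    def __init__(self, ports: List[int]) -> None:
        self.ports = ports
        self.mac_table: Dict[str, int] = {}  # MAC address -> port it was last seen on

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        """Learn the sender's location and return the ports the frame goes out on."""
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination sits behind the ingress port; nothing to forward.
            return []
        return [out_port]


switch = LearningSwitch(ports=[1, 2, 3])
first = switch.receive(1, "aa:aa:aa:aa:aa:aa", "bb:bb:bb:bb:bb:bb")
reply = switch.receive(2, "bb:bb:bb:bb:bb:bb", "aa:aa:aa:aa:aa:aa")
again = switch.receive(1, "aa:aa:aa:aa:aa:aa", "bb:bb:bb:bb:bb:bb")
```

The first frame is flooded because the destination is unknown; once both hosts have sent traffic, frames between them are forwarded out of a single port.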

What is OVSDB?

Simply put, Open vSwitch Database (OVSDB) is a management protocol in a software defined networking (SDN) environment. OVSDB was created by the Nicira team that was later acquired by VMware. OVSDB was part of Open vSwitch (OVS), which is a feature-rich, open source virtual switch designed for Linux-based hypervisors. Most network devices allow for remote configuration using legacy protocols, such as simple network management protocol (SNMP). The focus with OVS was to create a modern, programmatic management protocol interface – OVSDB was the answer.

OVSDB Diagram

While it’s sometimes assumed OpenFlow can do it all, this is not the case. For SDN Controller deployments with OVS, OpenFlow is still used to program flow entries, but OVSDB is used to configure OVS itself. Configuring OVS means doing things like creating, deleting, and modifying bridges, ports, and interfaces. If OVS is deployed in a standalone (non-OpenFlow) environment, there is no reason OVSDB can’t be used by itself to configure OVS. While this is possible, very few standalone network management platforms exist that support OVS or, specifically, native OVSDB. Note: Some management platforms may be using scripting with Bash or Python.
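To make the split between OpenFlow (flow entries) and OVSDB (switch configuration) more concrete, the following Python sketch builds an OVSDB request as a JSON-RPC message. It follows the author of this example's reading of RFC 7047, where a `transact` request carries a database name followed by a list of operations; the helper name `ovsdb_transact` is hypothetical, and the message is only serialized here, not sent to or validated against a live OVSDB server.

```python
import json
from typing import Any, Dict, List


def ovsdb_transact(
    request_id: int,
    operations: List[Dict[str, Any]],
    database: str = "Open_vSwitch",
) -> str:
    """Serialize a JSON-RPC 'transact' request: params are [db-name, op1, op2, ...]."""
    message = {
        "method": "transact",
        "params": [database, *operations],
        "id": request_id,
    }
    return json.dumps(message)


# A read-only operation: select the name column of every row in the Bridge table.
list_bridges = {
    "op": "select",
    "table": "Bridge",
    "where": [],          # an empty condition list matches all rows
    "columns": ["name"],
}

request = ovsdb_transact(1, [list_bridges])
decoded = json.loads(request)
```

Note that nothing in this message describes a flow entry; matching and forwarding rules would be programmed separately over OpenFlow.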

Although OVSDB was introduced along with OVS, it is now supported by switch platforms other than OVS, including network vendors such as Cumulus, Arista, and Dell, just to name a few. By supporting the Open vSwitch Database, these vendors are integrating their hardware platforms with SDN and network virtualization solutions.

What is OpenStack Networking?

OpenStack is an open source, community driven, cloud management platform. OpenStack is sometimes referred to as a cloud operating system. In reality, it’s a collection of projects and APIs that can be implemented with open source or commercial technologies. OpenStack Networking, otherwise referred to as Neutron, is one of many core projects within OpenStack. For example, another core and popular project is Nova, or OpenStack Compute. Prior to being called Neutron, OpenStack Networking was called Quantum, but because of naming rights, the OpenStack Foundation was forced to change the name. If we go back a little further, networking was actually part of the Nova project and was called Nova-networking.

There was a lack of flexibility and features in Nova networking, resulting in OpenStack Networking becoming a standalone project. OpenStack Networking, or Neutron, provides APIs for network constructs, such as port, interface, switch, router, floating IPs, security groups, etc. The APIs exposed by Neutron can almost be thought of as a software-defined networking (SDN) northbound API, but in reality, Neutron has nothing to do with SDN.

How OpenStack Networking is Managed

As long as the networking technology has a plug-in for OpenStack Neutron, it can smoothly integrate with OpenStack. This means it is up to the implementer to determine how the network constructs are actually deployed: a port, interface, switch, or router can be either physical or virtual, and it can be part of an SDN or a traditional network environment; it doesn’t matter. The only requirement is that the vendor (or open source technology) supports the Neutron APIs. For example, Big Switch, VMware, Cisco, Nuage, PLUMgrid, Arista, Juniper, Brocade, and many more support OpenStack Networking. Arista will integrate natively and configure hardware switches to support OpenStack, while VMware will integrate natively and configure virtual switches to tie back into OpenStack.
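The plug-in model described above can be sketched as a common interface with interchangeable backends. The following Python example is a simplified illustration only: `NetworkBackend`, `HardwareSwitchBackend`, `VirtualSwitchBackend`, and `NetworkingAPI` are hypothetical names and do not reflect the actual Neutron plug-in interfaces. It shows how the same API call can result in either a physical or a virtual implementation.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class NetworkBackend(ABC):
    """What every (hypothetical) plug-in must implement."""

    @abstractmethod
    def create_port(self, network: str, port_name: str) -> str:
        """Realize a logical port and return a description of what was done."""


class HardwareSwitchBackend(NetworkBackend):
    def create_port(self, network: str, port_name: str) -> str:
        return f"configured physical switch port {port_name} for {network}"


class VirtualSwitchBackend(NetworkBackend):
    def create_port(self, network: str, port_name: str) -> str:
        return f"attached virtual port {port_name} to {network}"


class NetworkingAPI:
    """The stable API seen by cloud users; the backend is swappable."""

    def __init__(self, backend: NetworkBackend) -> None:
        self.backend = backend
        self.ports: Dict[str, List[str]] = {}  # logical view: network -> port names

    def create_port(self, network: str, port_name: str) -> str:
        self.ports.setdefault(network, []).append(port_name)
        return self.backend.create_port(network, port_name)


physical_cloud = NetworkingAPI(HardwareSwitchBackend())
virtual_cloud = NetworkingAPI(VirtualSwitchBackend())

physical_result = physical_cloud.create_port("tenant-net", "port-1")
virtual_result = virtual_cloud.create_port("tenant-net", "port-1")
```

Both clouds end up with the same logical view of the network, even though the work done underneath differs.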

From an end user’s perspective, this is a huge win. This means that as an end user, you should be able to replace one vendor with another quite easily in an OpenStack environment. In theory, this is true, but many vendors are supporting proprietary extensions with their Neutron integration efforts, potentially making them hard to swap out. This is something to consider as an end user, especially if you are thinking about deploying a commercial offering integrated with OpenStack.

What Is Cisco ACI?

Cisco ACI (Application Centric Infrastructure) is the solution that emerged from Cisco following its acquisition of Insieme, a company it had funded for more than two years. ACI is seen by many as Cisco’s software-defined networking (SDN) offering for data center and cloud networks.

How Cisco ACI Works

Cisco ACI is a tightly coupled policy-driven solution that integrates software and hardware. The hardware for Cisco ACI is based on the Cisco Nexus 9000 family of switches. The software and integration points for ACI include a few components, including Additional Data Center Pod, Data Center Policy Engine, and Non-Directly Attached Virtual and Physical Leaf Switches. While there isn’t an explicit reliance on any specific virtual switch, at this point, policies can only be pushed down to the virtual switches if Cisco’s Application Virtual Switch (AVS) is used, though there has been talk about extending this to Open vSwitch in the near future.

To a large extent, the network for Cisco ACI is no different than what has been deployed over the past several years in enterprise data centers. What is different, however, is the management and policy framework, along with the protocols used in the underlying fabric.

In a leaf-spine ACI fabric, Cisco is provisioning a native Layer 3 IP fabric that supports equal-cost multi-path (ECMP) routing between any two endpoints in the network, but uses overlay protocols, such as virtual extensible local area network (VXLAN), under the covers to allow any workload to exist anywhere in the network. Supporting overlay protocols is what gives the fabric the ability to have machines, either physical or virtual, in the same logical network (Layer 2 domain), even while running Layer 3 routing down to the top of each rack. Cisco ACI supports VLAN, VXLAN, and network virtualization using generic routing encapsulation (NVGRE), which can be combined and bridged together to create a logical network/domain as needed.
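The ECMP behavior mentioned above can be illustrated with a short Python sketch of hash-based next-hop selection. This is a generic illustration, not Cisco's implementation: real switches use hardware hash functions, and the `pick_next_hop` helper, the use of SHA-256, and the spine names are assumptions made for the example. The point is that all packets of one flow take the same path, while different flows spread across the equal-cost paths.

```python
import hashlib
from typing import List, Tuple

# (source IP, destination IP, source port, destination port, protocol)
FiveTuple = Tuple[str, str, int, int, str]


def pick_next_hop(flow: FiveTuple, next_hops: List[str]) -> str:
    """Choose one of several equal-cost next hops by hashing the flow's 5-tuple."""
    if not next_hops:
        raise ValueError("at least one next hop is required")
    key = "|".join(str(part) for part in flow).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


spines = ["spine1", "spine2", "spine3", "spine4"]
flow = ("10.0.1.5", "10.0.2.9", 49152, 443, "tcp")

first_packet = pick_next_hop(flow, spines)
later_packet = pick_next_hop(flow, spines)
```

Because the choice depends only on the flow's header fields, packets within a flow stay in order on a single path.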

From a management perspective, it is the central Controller of the ACI solution, the Application Policy Infrastructure Controller (APIC) that will manage and configure the policy on each of the switches in the ACI fabric. Hardware becomes stateless with Cisco ACI, much like it is with Cisco’s UCS Computing Platform. This means no configuration is tied to the device. The APIC acts as a central repository for all policies and has the ability to rapidly deploy and re-deploy hardware, as needed, by using this stateless computing model.

Cisco ACI also serves as a platform for other services that are required within the data center or cloud environment. Through the use of the APIC, 3rd party services can be integrated for advanced security, load balancing, and monitoring. Vendors and products, such as SourceFire, Embrane, F5, Cisco ASA, and Citrix can integrate natively into the ACI fabric and be part of the policy defined by the admin. Through the use of northbound APIs on the APIC, ACI can also integrate with different types of cloud environments.

How Cisco ACI Integrates with Other Products

What is Cisco Application Policy Infrastructure Controller (APIC)?

The Cisco Application Policy Infrastructure Controller (APIC) is the single point of policy and management of a Cisco Application Centric Infrastructure (ACI) fabric. Cisco APIC re-defines how Cisco networks are managed and operated. In traditional Cisco networks, each node is managed independently, via the command-line interface (CLI), which is time consuming, tedious, and error prone. In ACI networks, network admins use the APIC to manage the network – they no longer need to access the CLI on every node to configure or provision network resources.

Cisco APIC differs from more traditional software-defined networking (SDN) Controllers and designs, in that there is zero de-coupling of the control plane from the data plane. Cisco APIC is only used to configure the policy; the policy is then delivered and instantiated on each of the nodes in the network. This allows the Cisco APIC to implement higher orders of logic to better integrate with the consumers of the network – the systems and application teams.

A common example is deploying a 3-tier application. In order to have done this in the past, administrators needed to know the VLANs, IP ranges, FW policy, Load balancing policy, etc. They are all network centric terms that the consumers of the network didn’t know. The value in Cisco ACI is changing this paradigm and becoming more application centric, as opposed to network centric. There will be low level parameters that need to be configured by a network admin, but these will be hidden and abstracted away for the server and application administrators.

With Cisco ACI, endpoint groups (EPGs) are created that may be “web,” “app,” and “db.” Contracts are created between EPGs to implement the desired functionality once, e.g. QoS, FW, LB, etc. As business demands grow and more hosts and VMs are required, all that has to happen is that the new machine is placed in the proper EPG. Every other change happens dynamically. One of the values of EPGs here is that they are not, or do not have to be, based on traditional network constructs, like IP subnets or VLANs.
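The relationship between EPGs and contracts can be sketched with a small Python model. This is a conceptual illustration only: the `Policy` class, its methods, and the service names are hypothetical and do not represent the APIC object model or API. It shows that a contract is defined once between groups, and that a new machine inherits it simply by joining the right EPG.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass
class Policy:
    """Toy policy store: group membership plus contracts between groups."""
    epgs: Dict[str, Set[str]] = field(default_factory=dict)            # EPG -> endpoints
    contracts: Set[Tuple[str, str, str]] = field(default_factory=set)  # (consumer, provider, service)

    def add_endpoint(self, epg: str, endpoint: str) -> None:
        self.epgs.setdefault(epg, set()).add(endpoint)

    def add_contract(self, consumer: str, provider: str, service: str) -> None:
        self.contracts.add((consumer, provider, service))

    def epg_of(self, endpoint: str) -> Optional[str]:
        for epg, members in self.epgs.items():
            if endpoint in members:
                return epg
        return None

    def allowed(self, src: str, dst: str, service: str) -> bool:
        """Traffic is allowed only if a contract exists between the two EPGs."""
        return (self.epg_of(src), self.epg_of(dst), service) in self.contracts


policy = Policy()
policy.add_endpoint("web", "web-1")
policy.add_endpoint("app", "app-1")
policy.add_endpoint("db", "db-1")
policy.add_contract("web", "app", "app-api")
policy.add_contract("app", "db", "sql")

# Scaling out: the new VM is only placed in the "web" EPG; no contract changes.
policy.add_endpoint("web", "web-2")

new_web_to_app = policy.allowed("web-2", "app-1", "app-api")
new_web_to_db = policy.allowed("web-2", "db-1", "sql")
```

Note that nothing in the model refers to IP subnets or VLANs; membership in a group is the only input to the policy decision.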

Industry shifts are redefining IT at every level, creating a need for application agility to enable businesses to address changes quickly. Traditional methods use a siloed operational stance, with no common operational model for applications, networks, security, and cloud teams. A common operational model offers simpler operations, better performance, and scalability.

To address these needs, Cisco introduced its Application Centric Infrastructure (ACI). It resides in the data center and is built with centralized automation and policy-driven application profiles. Cisco positions ACI as offering the flexibility of software with the scalability of hardware performance.

Along with the Cisco Nexus 9000 Series Switches and the Cisco Application Virtual Switch (AVS), a major component of Cisco ACI is the Cisco Application Policy Infrastructure Controller (APIC). APIC is the single point of automation and management in both physical and virtual environments, allowing operators to build fully automated and multitenant networks with scalability. The main function of Cisco APIC is to offer policy authority and resolution methods for the Cisco ACI, as well as devices attached to Cisco ACI.

Cisco APIC Features

- The capability to build and enforce application-centric network policies.

- An open standards framework, with the support of northbound and southbound application program interfaces (APIs).

- Integration of third-party Layer 4-7 services, virtualization, and management.

- Scalable security for multitenant environments.

- A common policy platform for physical, virtual, and cloud computing.

The Cisco APIC uses Cisco OpFlex, a southbound protocol in software-defined networking (SDN), to enable policies to be applied across physical and virtual switches.

The OpFlex approach differs from the OpenFlow communications protocol, which is one of the first and most widely deployed SDN standards, in that it focuses mainly on ensuring consistent policy enforcement across the underlying infrastructure. While OpFlex centralizes policies, OpenFlow looks to centralize all functions on the SDN Controller. OpFlex creators believe this shift will allow the Controller to offer greater resiliency, availability, and scalability, by moving some of the intelligence to hardware devices, using established network protocols.

With the Cisco APIC, northbound APIs allow for swift integration with existing management and orchestration frameworks. It is also compatible with OpenStack, which is developing an open cloud operating system to control the compute, storage and networking resources across the organization. This provides consistency across physical, virtual, and cloud environments when using the Cisco ACI policy. Southbound APIs enable users to extend the Cisco ACI policies to existing virtualization and Layer 4-7 services, as well as networking components.