Pulling the telecom-to-datacenter California alley-oop

Chinese telecom giant and increasingly important server player Huawei Technologies is moving from racks and blades into modular designs that use a mix of both approaches – and look very much like modular kit from Cisco Systems, IBM, and Hitachi, as well as the newer bladish iron from HP and Dell.

The likeness between the forthcoming Huawei servers and IBM and Hitachi machines announced back in April is enough to make you wonder if Huawei is actually manufacturing those companies' respective Flex System and Compute Blade 500 machines.

Huawei isn't – as far as we know – but as El Reg pointed out when Hitachi announced the CB500 machines, it sure does look like IBM and Hitachi are tag-teaming on manufacturing for modular systems, possibly by using the same ODM to bend the metal and make the server node enclosures.

The distinction between a blade and a modular system is a subtle one. With modular systems, server nodes are oriented horizontally in the chassis and are taller than a typical vertical blade is wide, allowing for hotter and taller processors as well as taller memory and peripheral cards than you can typically put in a skinny blade server.

The modular nodes can be half-width or full-width in the chassis and offer the same compute density as a blade server in a similar-sized rack enclosure, or slightly better. Because of the extra room in the node, they can accommodate GPU or x86 coprocessors as well. They are made for peripheral expansion and maximizing airflow around the nodes.

Modular systems generally have converged Ethernet networks for server and storage traffic, but also support an InfiniBand alternative to Ethernet for server networks and Fibre Channel for storage networks, just as blade servers do. Modular systems also tend to have integrated systems management that spans multiple compute node enclosures and are geared for virtualized server clouds. It's not a huge difference, when you get right down to it.

What is most important about modular systems, in this evolving definition, is that they look like – and compete with – the "California" Unified Computing System machines that Cisco put into the field three years ago when it broke into the server racket.

Cisco's server business has been nearly doubling for the past two years and is bucking the slowdown big-time in serverland. Cisco is defining the look of the modern blade server and eating market share. Huawei wants to pull the same California maneuver, peddling its own servers to its installed base of networking and telecom gear customers and driving out the server incumbents.

Huawei lifted the veil on the Tecal E9000 modular machines at the Huawei Cloud Congress show recently in Shanghai, and says that the boxes won't actually ship until the first quarter of next year – Huawei is clearly not in any kind of a big hurry to get its Cisco-alike boxes out the door.

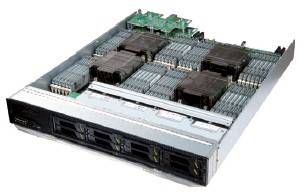

The Tecal E9000 modular system from Huawei

The Tecal E9000 is based on a 12U chassis that can support either eight full-width nodes or sixteen half-width nodes. The chassis has 95 per cent efficient power supplies, and a total of six supplies can go into the enclosure with redundant spares, rated at 3,000 watts a pop AC and 2,500 watts a pop DC.
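The power figures lend themselves to a bit of back-of-the-envelope arithmetic. The sketch below is only an illustration: Huawei has not said how many of the six supplies are held back as redundant spares, so the "N+1" and "N+N" splits, and the `usable_watts` helper itself, are assumptions rather than anything from the spec sheet.

```python
# Power budget arithmetic for one E9000 enclosure, using the article's figures.
SUPPLY_SLOTS = 6
WATTS_PER_SUPPLY = {"AC": 3000, "DC": 2500}


def usable_watts(feed: str, spares: int) -> int:
    """Watts available to the load when `spares` supplies are held in reserve."""
    if not 0 <= spares < SUPPLY_SLOTS:
        raise ValueError("spares must be between 0 and 5")
    return (SUPPLY_SLOTS - spares) * WATTS_PER_SUPPLY[feed]


# Assumed redundancy schemes; the article does not say which one Huawei uses.
SCHEMES = (("no spares", 0), ("N+1", 1), ("N+N", 3))

for feed in WATTS_PER_SUPPLY:
    for label, spares in SCHEMES:
        print(f"{feed} {label}: {usable_watts(feed, spares)} W")
```

On those assumptions, a fully populated AC enclosure tops out at 18kW with no spares and drops to 9kW if half the supplies are kept in reserve.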

The chassis and server nodes have enough airflow that they can operate at 40°C (104°F) without additional water blocks or other cooling mechanisms on the chassis or the rack. This is the big difference with modular designs, and one that was not possible with traditional blades. Blade enclosures ran hot because they were the wrong shape. The fact that simply reorienting the parts gets you the same computing capacity in the same form factor just goes to show you that the world still needs engineers.

The Tecal E9000 server nodes are all based on Intel's Xeon E5-2600 or E5-4600 processors, which span two or four processor sockets in a single system image, respectively. There are a couple of server node variants to give customers flexibility on memory and peripheral expansion. The nodes and the chassis are NEBS Level 3 certified (which means they can be deployed in telco networks) and also meet the European Telecommunications Standards Institute's acoustic noise standards (which means workers won't go deaf working on switching gear).

The Tecal CH121 server node

The CH121 is a single-width server node with two sockets that can be plugged with any of the Xeon E5-2600 series processors, whether they have four, six, or eight cores per socket. Each socket has a dozen DDR3 memory slots for a maximum capacity of 768GB across the two sockets using fat (and crazy expensive) 32GB memory sticks.

The node has two 2.5-inch disk bays, which can be jammed with SATA or SAS disk drives or solid state disks if you want lots of local I/O bandwidth but not as much capacity for storage on the nodes. The on-node disk controller supports RAID 0, 1, and 10 data protection on the pair of drives.

The CH121 machine has one full-height, half-length PCI-Express 3.0 x16 expansion slot and two PCI-Express 3.0 x16 mezzanine cards that plug the server node into the midplane and then out to either top-of-rack switches through a pass-through module or to integrated switches in the E9000 enclosure.

The CH221 takes the same server and makes it a double-wide node, which gives it enough room to add six PCI-Express peripheral slots. That's two x16 slots in full-height, full-length form factors plus four x8 slots with full-height, half-length dimensions.

The double-wide Tecal CH221 server node

A modified version of this node, called the CH222, uses the extra node's worth of space for disk storage instead of PCI-Express peripherals. The node has room for the same two front-plugged 2.5-inch drives plus another thirteen 2.5-inch bays for SAS or SATA disks or solid state drives if you want to get all flashy. These hang off the two E5-2600 processors, and the node is upgraded with a RAID disk controller that has 512MB of cache memory and supports RAID 0, 1, 10, 5, 50, 6, and 60 protection algorithms across the drives. This unit steps back to one PCI-Express x16 slot and two x16 mezz cards into the backplane.

If you want more processing to be aggregated together in an SMP node, then Huawei is happy to sell you the CH240 node, a four-socket box based on the Xeon E5-4600. Like other machines in this class from other vendors, the CH240 has 48 memory slots, and that taps out at 1.5TB of memory using those fat 32GB memory sticks. The CH240 supports all of the different SKUs of Intel's Xeon E5-4600 chips, which include processors with four, six, or eight cores.
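The memory ceilings quoted for the nodes are simple slot-count arithmetic, and a quick sketch makes them easy to check. The slot counts and the 32GB stick size come from the figures above; the `NODE_SLOTS` table and `max_memory_gb` helper are just illustrative names, not anything from Huawei.

```python
GB_PER_STICK = 32  # the fat 32GB DDR3 sticks mentioned in the article

# Node name -> total DDR3 slots, as quoted in the article.
NODE_SLOTS = {
    "CH121": 2 * 12,  # two sockets, a dozen slots per socket
    "CH240": 48,      # four sockets, 48 slots in total
}


def max_memory_gb(node: str) -> int:
    """Maximum memory in GB with every slot holding a 32GB stick."""
    return NODE_SLOTS[node] * GB_PER_STICK


for node in NODE_SLOTS:
    gb = max_memory_gb(node)
    print(f"{node}: {gb} GB ({gb / 1024:.2f} TB)")
```

That works out to 768GB for the two-socket CH121 and 1,536GB, or 1.5TB, for the four-socket CH240, matching the quoted maximums.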

The Tecal CH240 four-socketeer

The CH240 does not double-up on the system I/O even as it does double-up the processing and memory capacity compared to the CH221. It has the two PCI-Express x16 mezzanine cards to link into the midplane and then out to switches, but no other peripheral expansion beyond that in the base configuration.

This is a compute engine in and of itself, designed predominantly as a database, email, or server virtualization monster. It supports the same RAID disk controller used in the CH221, but because of all that memory crammed into the server node, there's only enough room for eight 2.5-inch bays for disks or SSDs in the front. If you want to sacrifice some local storage, you can put in a PCI-Express riser card, which lets you put one full-height, 3/4ths length x16 peripheral card into the CH240.

All of the machines are currently certified to run Windows Server 2008 R2, Red Hat Enterprise Linux 6, and SUSE Linux Enterprise Server 11, and presumably will be ready to run the new Windows Server 2012 when they start shipping early next year.

VMware's ESXi 5.X hypervisor and Citrix Systems' XenServer 6 hypervisor are certified as well, and again, presumably Hyper-V 3.0 will get certified on the box at some point and maybe even Red Hat's KVM hypervisor, too. There is no technical reason to believe that the server nodes can't run any modern release of any of the popular x86 hypervisors, but there's always a question of driver testing and certification.

The CX series of switch modules for the E9000 enclosure

On the switch front, Huawei is sticking with three different switch modules, which slide into the back of the E9000 chassis and provide networking to the outside world. The CX110, on the right in the above image, has 32 Gigabit Ethernet ports downstream into the server midplane and out to the PCI-Express mezz cards, which is two per node. The CX110 switch module has a dozen Gigabit and four 10GbE uplinks to talk to aggregation switches in the network.

The CX311 switch module takes the networking up another notch, with 32 10GbE downstream ports and sixteen 10GbE uplinks. This switch also has an expansion slot that can have an additional eight 10GbE ports or eight 8Gb/sec Fibre Channel switch ports linking out to storage arrays.
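One way to compare the two Ethernet modules is by oversubscription, the ratio of server-facing bandwidth to uplink bandwidth. The sketch below uses the port counts quoted above and assumes every port runs at its nominal line rate; it also assumes, for the last case, that the CX311 expansion slot is filled with the eight extra 10GbE ports and that they are used as uplinks. The `oversubscription` helper is a hypothetical name for illustration.

```python
from fractions import Fraction


def oversubscription(downstream_gbps: int, uplink_gbps: int) -> Fraction:
    """Ratio of server-facing bandwidth to uplink bandwidth (1 means balanced)."""
    return Fraction(downstream_gbps, uplink_gbps)


# Port counts as quoted in the article; speeds in Gb/sec.
cx110 = oversubscription(32 * 1, 12 * 1 + 4 * 10)
cx311 = oversubscription(32 * 10, 16 * 10)
# Assumption: the expansion slot carries eight extra 10GbE uplinks.
cx311_expanded = oversubscription(32 * 10, (16 + 8) * 10)

results = (
    ("CX110", cx110),
    ("CX311", cx311),
    ("CX311 + expansion", cx311_expanded),
)
for name, ratio in results:
    print(f"{name}: {ratio} ({float(ratio):.2f}:1)")
```

On those assumptions, the CX110 actually has more uplink than downstream capacity, while the CX311 works out to 2:1, easing to 4:3 with the expansion ports in place.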

Huawei also has a QDR/FDR InfiniBand switch model with sixteen downstream ports and eighteen upstream ports, which can run at either 40Gb/sec or 56Gb/sec speeds.

The current midplane in the E9000 chassis is rated at 5.6Tb/sec of aggregate switching bandwidth across its four networking switch slots, which can be used to drive Ethernet or InfiniBand traffic (depending on the switch module you choose).

Here's the important thing: the Tecal E9000 midplane will have an upgrade option that pushes enclosure midplane bandwidth up to 14.4Tb/sec. That will let it drive Ethernet at 40 and 100 Gigabit speeds and next-generation InfiniBand EDR, which will run at 100Gb/sec. Fibre Channel at 16Gb/sec and 32Gb/sec will also be supported after the midplane is upgraded. It is not clear when this upgraded midplane will debut.

Pricing on all of this Tecal E9000 gear has not been set yet, according to Huawei. ®
