What You Will Learn

The Cisco® Global Cloud Index is an ongoing effort to forecast the growth of global data center and cloud-based IP traffic. The forecast includes trends associated with data center virtualization and cloud computing. This document presents the details of the study and the methodology behind it.

Forecast Overview

Global data center traffic:

• Annual global data center IP traffic will reach 4.8 zettabytes by the end of 2015. In 2015, global data center IP traffic will reach 402 exabytes per month.

• Global data center IP traffic will increase fourfold over the next 5 years. Overall, data center IP traffic will grow at a compound annual growth rate (CAGR) of 33 percent from 2010 to 2015.

Data center virtualization and cloud computing transition:

• The number of workloads per installed traditional server will increase from 1.4 in 2010 to 2.0 in 2015.

• The number of workloads per installed cloud server will increase from 3.5 in 2010 to 7.8 in 2015.

• By 2014, more than 50 percent of all workloads will be processed in the cloud.

Global cloud traffic:

• Annual global cloud IP traffic will reach 1.6 zettabytes by the end of 2015. In 2015, global cloud IP traffic will reach 133 exabytes per month.

• Global cloud IP traffic will increase twelvefold over the next 5 years. Overall, cloud IP traffic will grow at a CAGR of 66 percent from 2010 to 2015.

• Global cloud IP traffic will account for more than one-third (34 percent) of total data center traffic by 2015.

Regional cloud readiness:

• North America and Western Europe lead in broadband access (fixed and mobile). Asia Pacific leads in the number of subscribers due to the region's large population.

• Western Europe leads in fixed average download speeds of 12.2 Mbps, followed by Central and Eastern Europe with 9.4 Mbps, making these regions the most cloud ready from a download speed perspective.

• Western Europe and Central and Eastern Europe lead in average fixed upload speeds of 5.9 Mbps and 5.7 Mbps, respectively, making these regions the most cloud ready from an upload speed perspective.

• Western Europe and North America lead in average fixed latencies with 68 ms and 75 ms respectively, making these regions the most cloud ready from a latency perspective.

Evolution of Data Center Traffic

From 2000 to 2008, peer-to-peer file sharing dominated Internet traffic. As a result, the majority of Internet traffic did not touch a data center, but was communicated directly between Internet users. Since 2008, most Internet traffic has originated or terminated in a data center. Data center traffic will continue to dominate Internet traffic for the foreseeable future, but the nature of data center traffic will undergo a fundamental transformation brought about by cloud applications, services, and infrastructure. By 2015, one-third of data center traffic will be cloud traffic.

The following sections summarize not only the volume and growth of traffic entering and exiting the data center, but also the traffic carried between different functional units within the data center.

Global Data Center IP Traffic: Already in the Zettabyte Era

Figure 1 summarizes the forecast for data center IP traffic growth from 2010 to 2015.

Figure 1. Global Data Center IP Traffic Growth

The Internet may not reach the zettabyte era until 2015, but the data center has already entered the zettabyte era. While the amount of traffic crossing the Internet and IP WAN networks is projected to reach nearly 1 zettabyte per year in 2015, the amount of data center traffic is already over 1 zettabyte per year, and by 2015 will quadruple to reach 4.8 zettabytes per year. This represents a 33 percent CAGR. The higher volume of data center traffic is due to the inclusion of traffic inside the data center. (Typically, definitions of Internet and WAN stop at the boundary of the data center.)

The global data center traffic forecast, a major component of the Global Cloud Index, covers network data centers worldwide operated by service providers as well as private enterprises. Please see Appendix A for details on the methodology of the data center and cloud traffic forecasts.

Data Center Traffic Destinations: Most Traffic Stays Within the Data Center

Consumer and business traffic flowing through data centers can be broadly categorized into three main areas (Figure 2):

• Traffic that remains within the data center

• Traffic that flows from data center to data center

• Traffic that flows from the data center to end users through the Internet or IP WAN

Figure 2. Global Data Center Traffic by Destination

In 2010, 77 percent of traffic remained within the data center, and this share will decline only slightly to 76 percent by 2015.
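These shares can be checked against the destination volumes reported in Table 1. The sketch below is illustrative only: the dictionary layout and the `destination_shares` helper are assumptions introduced here and are not part of the Cisco methodology.

```python
# Destination volumes copied from Table 1. Every entry uses the same unit,
# so the resulting shares are unit-free.
TRAFFIC = {
    2010: {"to_user": 179, "dc_to_dc": 75, "within_dc": 887},
    2015: {"to_user": 815, "dc_to_dc": 322, "within_dc": 3618},
}


def destination_shares(volumes):
    """Return each destination's share of the yearly total, in percent."""
    total = sum(volumes.values())
    return {name: 100 * value / total for name, value in volumes.items()}


for year, volumes in TRAFFIC.items():
    shares = destination_shares(volumes)
    print(year, {name: round(pct, 1) for name, pct in shares.items()})
```

With the rounded table values, the within-data-center share comes out between 77 and 78 percent for 2010 and just above 76 percent for 2015, in line with the figures quoted in the text.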

The fact that the majority of traffic remains within the data center can be attributed to several factors:

• Functional separation of application servers and storage, which requires all replication and backup traffic to traverse the data center

• Functional separation of database and application servers, such that traffic is generated whenever an application reads from or writes to a central database

• Parallel processing, which divides tasks into multiple smaller tasks and sends them to multiple servers, contributing to internal data center traffic

The ratio of traffic exiting the data center to traffic remaining within the data center might be expected to increase over time, because video files are bandwidth-heavy and do not require database or processing traffic commensurate with their file size. However, the ongoing virtualization of data centers offsets this trend. Virtualization of storage, for example, increases traffic within the data center because virtualized storage is no longer local to a rack or server. Table 1 provides details for global data center traffic growth rates.

Table 1. Global Data Center Traffic, 2010-2015

| Data Center IP Traffic, 2010-2015 | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | CAGR 2010-2015 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| By Type (EB per Year) | | | | | | | |
| Data Center-to-User | 179 | 262 | 363 | 489 | 635 | 815 | 36% |
| Data Center-to-Data Center | 75 | 110 | 150 | 198 | 252 | 322 | 34% |
| Within Data Center | 887 | 1,279 | 1,727 | 2,261 | 2,857 | 3,618 | 33% |
| By Segment (EB per Year) | | | | | | | |
| Consumer | 865 | 1,304 | 1,815 | 2,429 | 3,111 | 4,021 | 36% |
| Business | 276 | 347 | 425 | 520 | 633 | 735 | 22% |
| By Type (EB per Year) | | | | | | | |
| Cloud Data Center | 131 | 257 | 466 | 765 | 1,114 | 1,642 | 66% |
| Traditional Data Center | 1,010 | 1,394 | 1,775 | 2,184 | 2,629 | 3,114 | 25% |
| Total (EB per Year) | | | | | | | |
| Total Data Center Traffic | 1,141 | 1,651 | 2,240 | 2,949 | 3,744 | 4,756 | 33% |

Source: Cisco Global Cloud Index, 2011

Definitions

• Data Center-to-User: Traffic that flows from the data center to end users through the Internet or IP WAN

• Data Center-to-Data Center: Traffic that flows from data center to data center

• Within Data Center: Traffic that remains within the data center

• Consumer: Traffic originating with or destined for personal end-users

• Business: Traffic originating with or destined for business end-users

• Cloud Data Center: Traffic associated with cloud consumer and business applications

• Traditional Data Center: Traffic associated with non-cloud consumer and business applications
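The growth multiples and CAGR values reported in Table 1 follow from the standard compound growth formula. The snippet below is a minimal sketch using the 2010 and 2015 endpoints from the table; the `cagr` helper and the `SERIES` layout are names introduced here for illustration.

```python
def cagr(start, end, years):
    """Compound annual growth rate as a fraction (0.33 means 33 percent)."""
    return (end / start) ** (1 / years) - 1


# (2010 value, 2015 value) pairs copied from Table 1.
SERIES = {
    "Total data center": (1141, 4756),
    "Cloud data center": (131, 1642),
    "Traditional data center": (1010, 3114),
}

for name, (start, end) in SERIES.items():
    growth = end / start
    print(f"{name}: {growth:.1f}x over 5 years, CAGR {cagr(start, end, 5):.0%}")
```

Rounded to whole percentages, the results agree with the 33, 66, and 25 percent CAGRs in the table, and the multiples agree with the fourfold and twelvefold growth cited in the Forecast Overview.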

Transitioning Workloads to Cloud Data Centers

A workload can be defined as the amount of processing a server undertakes to execute an application and support a number of users interacting with the application. The Global Cloud Index forecasts the transition of workloads from traditional data centers to cloud data centers. The year 2014 is expected to be pivotal: workloads processed in cloud data centers (51 percent) will exceed those processed in traditional data centers (49 percent) for the first time. Continuing that trend, we expect cloud-processed workloads to dominate at 57 percent by 2015 (Figure 3).

Figure 3. Workload Distribution: 2010-2015

Source: Independent Analyst Shipment Data, Cisco Analysis

Traditionally, one server carried one workload. However, with increasing server computing capacity and virtualization, multiple workloads per physical server are common in cloud architectures. Cloud economics, including server cost, resiliency, scalability, and product lifespan, are promoting migration of workloads across servers, both inside the data center and across data centers (even centers in different geographic areas). Often an end user application can be supported by several workloads distributed across servers. This can generate multiple streams of traffic within and between data centers, in addition to traffic to and from the end user. Table 2 provides details regarding workloads shifting from traditional data centers to cloud data centers.

Table 2. Workload Shift from Traditional Data Center to Cloud Data Center

| Global Data Center Workloads in Millions | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | CAGR 2010-2015 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Traditional Data Center Workloads | 45.3 | 49.2 | 52.6 | 58.1 | 64.0 | 66.6 | 8% |
| Cloud Data Center Workloads | 12.2 | 21.0 | 33.2 | 49.3 | 67.3 | 88.3 | 48% |
| Total Data Center Workloads | 57.5 | 70.2 | 85.8 | 107.4 | 131.2 | 154.8 | 22% |
| Cloud Workloads as a Percentage of Total Data Center Workloads | 21% | 30% | 39% | 46% | 51% | 57% | |
| Traditional Workloads as a Percentage of Total Data Center Workloads | 79% | 70% | 61% | 54% | 49% | 43% | |
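The crossover year can be derived directly from the workload counts in Table 2. The following is a small sketch; the variable names and the `cloud_share` helper are assumptions made for this illustration.

```python
YEARS = [2010, 2011, 2012, 2013, 2014, 2015]
TRADITIONAL = [45.3, 49.2, 52.6, 58.1, 64.0, 66.6]  # millions of workloads, Table 2
CLOUD = [12.2, 21.0, 33.2, 49.3, 67.3, 88.3]        # millions of workloads, Table 2


def cloud_share(cloud, traditional):
    """Cloud workloads as a percentage of all workloads."""
    return 100 * cloud / (cloud + traditional)


shares = {
    year: cloud_share(cloud, trad)
    for year, cloud, trad in zip(YEARS, CLOUD, TRADITIONAL)
}

# First year in which cloud workloads are the majority.
crossover = next(year for year in YEARS if shares[year] > 50)

for year in YEARS:
    print(year, f"{shares[year]:.1f}%")
print("Crossover year:", crossover)
```

The computed shares round to the percentages in the last two rows of the table, and the first year above 50 percent is 2014.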

Global Cloud IP Traffic Growth

Data center traffic on a global scale grows at 33 percent CAGR (Figure 4), but cloud data center traffic grows at a much faster rate of 66 percent CAGR, or twelvefold growth between 2010 and 2015 (Figure 5).

Figure 4. Total Data Center Traffic Growth

Figure 5. Cloud Data Center Traffic Growth

By 2015, more than one-third of all data center traffic will be based in the cloud. The two main causes of this growth are the rapid adoption and migration to a cloud architecture and the ability of cloud data centers to handle significantly higher traffic loads. Cloud data centers support increased virtualization, standardization, automation, and security. These factors lead to increased performance, as well as higher capacity and throughput.
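The cloud share of data center traffic for each forecast year can be recomputed from the cloud and total rows of Tables 1 and 3. This is an illustrative sketch; the list names are chosen here and do not come from the report.

```python
YEARS = [2010, 2011, 2012, 2013, 2014, 2015]
CLOUD = [131, 257, 466, 765, 1114, 1642]      # cloud traffic, Tables 1 and 3
TOTAL = [1141, 1651, 2240, 2949, 3744, 4756]  # total data center traffic, Table 1

# Cloud traffic as a percentage of all data center traffic, per year.
cloud_share = {
    year: 100 * cloud / total
    for year, cloud, total in zip(YEARS, CLOUD, TOTAL)
}

for year in YEARS:
    print(year, f"{cloud_share[year]:.1f}%")
```

The share rises every year and lands between 34 and 35 percent in 2015, consistent with the "more than one-third" statement.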

Global Business and Consumer Cloud Growth

For the purposes of this study, the Global Cloud Index characterizes traffic based on services delivered to the end user. Business data centers are typically dedicated to organizational needs and handle traffic for business needs that may adhere to stronger security guidelines (Figure 6). Consumer data centers typically cater to a wider audience and handle traffic for the mass consumer base (Figure 7).

Figure 6. Business Traditional and Cloud Data Centers

Figure 7. Consumer Traditional and Cloud Data Centers

Within the cloud data center traffic forecast, consumer traffic leads with a CAGR of 67 percent. At 14 percent of consumer data center traffic in 2010, consumer cloud traffic is forecast to become more than one-third of all consumer data center traffic in 2015. Business cloud traffic grows at a CAGR of 53 percent, starting at 6 percent of business data center traffic in 2010 and expected to rise to 19 percent in 2015. Table 3 provides details for global cloud traffic growth rates.

Table 3. Global Cloud Traffic, 2010-2015

| Cloud IP Traffic, 2010-2015 | 2010 | 2011 | 2012 | 2013 | 2014 | 2015 | CAGR 2010-2015 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| By Segment (EB per Year) | | | | | | | |
| Consumer | 115 | 226 | 413 | 686 | 1,005 | 1,503 | 67% |
| Business | 16 | 31 | 52 | 79 | 109 | 139 | 53% |
| Total (EB per Year) | | | | | | | |
| Total Cloud Traffic | 131 | 257 | 466 | 765 | 1,114 | 1,642 | 66% |

Source: Cisco Global Cloud Index

Global Cloud Readiness

The cloud readiness segment of this study offers a regional view of the fundamental requirements for broadband and mobile networks to deliver next-generation cloud services. The enhancements and reliability of these requirements will support the increased adoption of business-grade and consumer-grade cloud computing. For instance, it is important for consumers to be able to download music and videos on the road as well as for business users to have continuous access to videoconferencing and mission-critical customer relationship management (CRM) and enterprise resource planning (ERP) systems. Download and upload speeds as well as latencies are vital measures to assess network capabilities of cloud readiness. Figure 8 provides the sample business and consumer cloud service categories and the corresponding network requirements used for this study. Regional network performance statistics were ranked by their ability to support these three cloud service categories.

Figure 8. Sample Business and Consumer Cloud Service Categories

Over 45 million records from Ookla and the International Telecommunication Union (ITU) were analyzed from nearly 150 countries around the world. The regional averages of these measures are included in this report. Individual countries may have slightly or significantly higher or lower averages compared to the regional averages for download speed, upload speed, and network latency. For example, while the overall Asia Pacific region is less ready for cloud services compared to other regions because several individual countries contribute lower speeds and higher latencies, individual countries within the region such as South Korea and Japan show significantly higher readiness. Please see Appendix E for further details on outlier or lead countries per region. The cloud readiness characteristics are as follows.

• Broadband ubiquity: This indicator measures fixed and mobile broadband penetration while considering population demographics to understand the pervasiveness and expected connectivity in various regions.

• Download speed: With increased adoption of mobile and fixed bandwidth-intensive applications, end user download speed is an important characteristic. This indicator will continue to be critical for the quality of service delivered to virtual machines, CRM and ERP cloud platforms for businesses, and video download and content retrieval cloud services for consumers.

• Upload speed: With the increased adoption of virtual machines, tablets, and videoconferencing in enterprises as well as by consumers on both fixed and mobile networks, upload speeds are especially critical for delivery of content to the cloud. The importance of upload speeds will continue to increase over time, promoted by the dominance of cloud computing and data center virtualization, the need to transmit many millions of software updates and patches, the distribution of large files in virtual file systems, and the demand for consumer cloud game services and backup storage.

• Network latency: Delays experienced with voice over IP (VoIP), viewing and uploading videos, online banking on mobile broadband, or viewing hospital records in a healthcare setting are due to high latencies (usually reported in milliseconds). Reducing delay in delivering packets to and from the cloud is crucial to delivering today's advanced services.

Broadband Ubiquity

Figure 9 summarizes broadband penetration by region in 2011. For further details, please refer to Appendix D.

Figure 9. Regional Broadband Ubiquity, 2011

Source: ITU, Informa Media and Telecoms, Cisco Analysis

Download and Upload Speed Overview

In 2011, global average download speeds are 4.9 Mbps, with global average fixed download speeds at 6.7 Mbps and global average mobile download speeds at 3 Mbps. Global average upload speeds are 2.7 Mbps, with global average fixed upload speeds at 3.7 Mbps and global average mobile upload speeds at 1.6 Mbps.

Western Europe leads in overall average fixed and mobile download speeds of 12.5 Mbps, with Central and Eastern Europe next with 9.3 Mbps.

Fixed Download Speeds

For average consumer fixed download speeds (Figure 10), Western Europe leads with 9.4 Mbps and North America follows with 8.4 Mbps. Western Europe averages 16.8 Mbps for fixed business speeds and Central and Eastern Europe averages 11.9 Mbps. For each region's peak download and upload speeds, see Appendix E.

Figure 10. Business and Consumer Fixed Download Speeds by Region

Source: Cisco Analysis of Ookla Speedtest Data, 2011

Mobile Download Speeds

Western Europe leads in overall mobile download speeds with 4.9 Mbps and North America closely follows with 4.6 Mbps, making them the most cloud-ready regions from a download-speed perspective. Central and Eastern Europe leads in business mobile download speeds with 6.1 Mbps, and Western Europe is next with 5.8 Mbps (Figure 11). North America leads in average mobile consumer download speeds with 4.6 Mbps and Western Europe is next with 4.5 Mbps. Please refer to Appendix E for further details.

Figure 11. Business and Consumer Mobile Download Speeds by Region

Source: Cisco Analysis of Ookla Speedtest Data, 2011

Fixed Upload Speeds

Global average upload speeds are 2.7 Mbps. Average global fixed upload speeds are 3.7 Mbps. Western Europe leads with an average fixed upload speed of 5.9 Mbps and Central and Eastern Europe follows with 5.7 Mbps, making them the most cloud-ready regions from an upload speed perspective. Average global business fixed upload speeds are 6.5 Mbps. Western Europe leads with 11.2 Mbps and Central and Eastern Europe is next with 8 Mbps (Figure 12). Average global consumer fixed upload speeds are 2.1 Mbps, with Central and Eastern Europe leading with 4 Mbps and APAC next with 3.1 Mbps (Figure 12). Please refer to Appendix E for further details.

Figure 12. Business and Consumer Fixed Upload Speeds by Region

Source: Cisco Analysis of Ookla Speedtest Data, 2011

Mobile Upload Speeds

Global average mobile upload speeds are 1.6 Mbps. Average global mobile business upload speeds are higher at 2.7 Mbps, while average consumer mobile upload speeds are 1.1 Mbps. Central and Eastern Europe leads with overall average mobile upload speeds of 2.5 Mbps and Western Europe follows with 2.3 Mbps, making them the most cloud-ready regions from a mobile upload speed perspective. Central and Eastern Europe leads in average consumer mobile upload speed with 1.8 Mbps and North America follows with 1.3 Mbps. Central and Eastern Europe leads in business mobile upload speeds with 4.1 Mbps and Western Europe is next with 4 Mbps. Please refer to Appendix E for further details.

Figure 13. Business and Consumer Mobile Upload Speeds by Region

Source: Cisco Analysis of Ookla Speedtest Data, 2011

Network Latency

Overall average fixed and mobile global latency is 201 ms. Global average fixed latency is 125 ms and average mobile latency is 290 ms. Western Europe leads in fixed latency with 68 ms and North America closely follows with 75 ms, making these two regions the most cloud ready from a fixed latency perspective. Western Europe leads from the mobile latency perspective with 147 ms and Central and Eastern Europe follows with 173 ms, making these two regions the most cloud ready from a mobile latency perspective. Global business latency is 169.7 ms and consumer latency is higher at 217.3 ms. Average global fixed business latency is 112 ms while fixed consumer latency is 132.9 ms.

Figure 14 shows latencies by region. Western Europe leads in fixed business latencies with 61 ms and Central and Eastern Europe is next with 63 ms. North America leads in fixed consumer latencies with 63.3 ms and Western Europe is next with 72 ms. Global mobile business average latency is 251 ms, with Central and Eastern Europe experiencing the best latency at 111.3 ms and Western Europe next with 126.7 ms. Global mobile consumer average latency is 307.3 ms, with Western Europe leading with 159 ms and North America next with 173 ms. Please refer to Appendix E for further details.

Figure 14. Business and Consumer Network Latencies by Region

Source: Cisco Analysis of Ookla Speedtest Data, 2011
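The regional latency rankings described in this section can be reproduced by sorting the fixed-network latencies listed in Table 5 (Appendix D). The sketch below is only an illustration; the data layout and the `rank_by_latency` helper are assumptions introduced here.

```python
# Fixed-network average latencies in ms by region, copied from Table 5.
FIXED_LATENCY_MS = {
    "APAC": {"business": 103, "consumer": 124},
    "CEE": {"business": 63, "consumer": 84},
    "LATAM": {"business": 151, "consumer": 151},
    "MEA": {"business": 245, "consumer": 225},
    "NA": {"business": 87, "consumer": 63},
    "WE": {"business": 61, "consumer": 72},
}


def rank_by_latency(table, segment):
    """Regions ordered from lowest (best) to highest latency for a segment."""
    return sorted(table, key=lambda region: table[region][segment])


for segment in ("business", "consumer"):
    print(segment, rank_by_latency(FIXED_LATENCY_MS, segment))
```

Lower latency ranks first, so the first two entries of each list correspond to the leading regions named in the text for fixed business and fixed consumer latency.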

Application Readiness

As new models of service delivery and cloud-based business and consumer application consumption evolve, the fundamentals of network characteristics are essential. Fixed and mobile broadband penetration, download and upload speeds, and latency are indicators of readiness for delivery to and consumption from the cloud. Furthermore, although speeds and latency are significant to all interested in assessing the quality of broadband services, they are not the only metrics that matter. Understanding basic broadband measures provides insight into which applications are most likely to benefit from faster broadband services for end consumers and business users. For business and consumer applications alike, advances in video codecs, traffic optimization technologies, and related areas will complement improvements in speed and latency. They should yield additional mechanisms for isolating speed bottlenecks at different points along the end-to-end path, as well as other technical measures that give a better understanding of how to deliver the best quality of experience.

All of the regions have some level of cloud readiness based on their average upload/download speeds and latencies, as shown in Figure 15. Asia Pacific, Western Europe, North America, and Central and Eastern Europe are better prepared for intermediate cloud applications such as streaming high-definition video. The Middle East and Africa and Latin America can support basic cloud services. None of the regions' current average network performance characteristics can support advanced cloud services today. Most regions have some outlier countries with network performance results that are higher than their region's average cloud readiness metrics. Examples include South Korea and Japan in APAC, and Egypt, South Africa, and the UAE in MEA.

Figure 15. End User Cloud Application Readiness

Conclusion

In conclusion, here are the key takeaways from our first Global Cloud Index.

In terms of data center and cloud traffic, we are firmly in the zettabyte era. Global data center traffic will grow fourfold from 2010 to 2015 and reach 4.8 zettabytes annually by 2015. A subset of data center traffic is cloud traffic, which will grow twelvefold over the forecast period and represent over one-third of all data center traffic by 2015.

A key traffic driver as well as an indicator of the transition to cloud computing is increasing data center virtualization. The growing number of end-user devices, combined with consumer and business users' preference or need to stay connected, is creating new network requirements. The evolution of cloud services is driven in large part by users' expectations to access applications and content anytime, from anywhere, over any network and with any device. Cloud-based data centers can support more virtual machines and workloads per physical server than traditional data centers. By 2014, more than 50 percent of all workloads will be processed in the cloud.

From a cloud readiness perspective, the study covers the importance of broadband ubiquity. Based on the regional average download and upload speeds and latencies for business and consumer connections, all regions can support some level of cloud services. However, no region's average network characteristics can currently support high-end advanced cloud applications.

For More Information

For more information, please see www.cisco.com/go/cloudindex.

Appendix A: Data Center Traffic Forecast Methodology

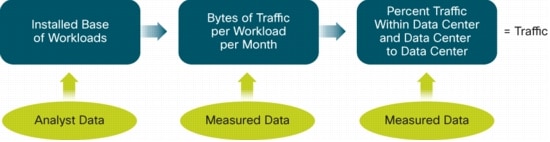

Figure 16 outlines the methodology used to forecast data center and cloud traffic. The methodology begins with the installed base of workloads by workload type and implementation and then applies the volume of bytes per workload per month to obtain the traffic for current and future years.

Figure 16. Data Center Traffic Forecast Methodology
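The core arithmetic of this methodology (installed workloads multiplied by bytes per workload per month) can be sketched as follows. This is a simplified illustration, not Cisco's model: the function name is hypothetical, the calculation ignores workload type and implementation, and the 1.65 TB per workload per month input is an invented figure rather than a value from the report. Only the 57.5 million workload count comes from Table 2.

```python
TB_PER_EB = 1_000_000  # 1 exabyte = 1,000,000 terabytes (decimal units)


def annual_traffic_eb(workloads_millions, tb_per_workload_per_month):
    """Annual traffic in exabytes from an installed base of workloads
    and an average monthly traffic volume per workload."""
    workloads = workloads_millions * 1_000_000
    terabytes_per_year = workloads * tb_per_workload_per_month * 12
    return terabytes_per_year / TB_PER_EB


# 57.5 million workloads is the 2010 total in Table 2.
# 1.65 TB per workload per month is a HYPOTHETICAL input for illustration.
estimate = annual_traffic_eb(57.5, 1.65)
print(f"{estimate:.1f} EB per year")
```

In the actual forecast, the same multiplication would be applied per workload type and per implementation (cloud or noncloud) and then summed, as described in the sections that follow.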

Analyst Data

Data from several analyst firms was used to calculate an installed base of workloads by workload type and implementation (cloud or noncloud). The analyst input consisted of server shipments with specified workload type and implementation. Cisco then estimated the installed base of servers and the number of workloads per server to obtain an installed base of workloads.

Measured Data

Network data was collected from 10 enterprise and Internet data centers. The architectures of the data centers analyzed vary, with some having a three-tiered and others a two-tiered data center architecture. For the three-tiered data centers, data was collected from four points: the link from the access routers to the aggregation routers, the link from the aggregation switches or routers to the site or regional backbone router, the WAN gateway, and the Internet gateway. From two-tiered data centers, data was collected from three points: the link from the access routers to the aggregation routers, the WAN gateway, and the Internet gateway.

For enterprise data centers, any traffic measured northbound of the aggregation also carries non-data-center traffic to and from the local business campus. For this reason, in order to obtain ratios of the volume of traffic being carried at each tier, it was necessary to measure the traffic by conversations between hosts rather than traffic between interfaces, so that the non-data-center conversations could be eliminated. The hosts at either end of the conversation were identified and categorized by location and type. To be considered data center traffic, at least one of the conversation pairs had to be identified as appearing in the link between the data center aggregation switch or router and the access switch or router. A total of 50,000 conversations were cataloged, representing a volume of 30 terabytes of traffic for each month that was analyzed. Included in this study were the 12 months ending September 30, 2011.
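The conversation-based filtering described above can be sketched as a simple membership test: a conversation counts as data center traffic when at least one of its two hosts was seen on the aggregation-to-access link. The record layout, host names, and byte counts below are all hypothetical and are used only to illustrate the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conversation:
    host_a: str
    host_b: str
    bytes_total: int


def data_center_conversations(conversations, data_center_hosts):
    """Keep conversations in which at least one endpoint is a host
    observed on the data center aggregation-to-access link."""
    return [
        c for c in conversations
        if c.host_a in data_center_hosts or c.host_b in data_center_hosts
    ]


# Hypothetical sample data: names and volumes are invented.
dc_hosts = {"app-01", "db-01"}
sample = [
    Conversation("app-01", "db-01", 5_000),       # within the data center
    Conversation("app-01", "laptop-17", 1_200),   # data center to user
    Conversation("laptop-17", "printer-3", 300),  # campus only, excluded
]

kept = data_center_conversations(sample, dc_hosts)
dc_bytes = sum(c.bytes_total for c in kept)
print(len(kept), "conversations,", dc_bytes, "bytes")
```

Filtering by conversation rather than by interface is what allows campus-only traffic, such as the third record, to be dropped even though it crosses the same northbound links.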

Appendix B: Mobility and Multiple Device Ownership are Primary Promoters of Cloud Application Adoption

Figure 17 shows the proliferation of multiple device ownership.

Figure 17. Per-User Ownership of Devices Connected to the Internet

Internet users are using multiple devices to connect to the Internet, and these devices are increasingly mobile. It is no longer feasible for these users to manually replicate content and applications to each of their devices. While storing content on a peripheral drive connected to the local home or business network was once an elegant solution, the increasing mobility of Internet devices is making cloud storage a more attractive option.

• Nearly 70 percent of Internet users will use more than five network-connected devices in 2015, up from 36 percent at the end of 2010. These devices include laptops, desktops, smartphones, tablets, Internet-connected televisions, set-top boxes, game consoles, digital photo frames, and other Internet-connected electronics.

• The average business will need to support twice as many end-user devices in 2015 as in 2010, and the diversity of these devices will continue to grow. The days of restricting network access to identical company-issued PCs are soon to pass.

Appendix C: With the Cloud Comes Complexity

Figure 18 illustrates the complexity that accompanies cloud-based computing.

Figure 18. One Video, One User, Seventeen Paths in 2015

With the cloud, users can access their content and applications on many devices. Each of these devices may have the capability to support multiple network connections and multiple displays. Each network connection has particular latency and speed signatures, and each display has its own aspect ratio and resolution. Each cloud application may incorporate multiple content sources and may be linked with a number of other applications. The cloud is a multidimensional environment, and the resulting complexity can be astounding.

Although there may be many ways to measure the complexity of data center operations and how that will change with the advent of cloud applications, a simple complexity gauge can be created by counting the possible combinations of users, devices, displays, connections, and content sources (Table 4).

Table 4. Data Center Complexity

| Data Center Complexity Factors | 2010 | 2015 |
| --- | --- | --- |
| Number of Internet users | 1.9 Billion | 3.1 Billion |
| Number of devices per user | 3.4 | 3.9 |
| Number of connection types per device | 1.3 | 1.4 |
| Number of display types per device | 1.1 | 1.3 |
| Number of applications per user | 2.5 | 3.5 |
| Number of content sources per application | 1.5 | 2.3 |
| Possible combinations | 33 Billion | 183 Billion |

By this measure, data center complexity will increase fivefold between 2010 and 2015. Another way to look at these numbers is to limit the scenario to a single piece of content and a single user. In 2005, a single piece of content had an average of three paths to a user: it might have traveled through a mobile connection to a smartphone and been displayed on a mobile screen by a mobile application, or it might have traveled through a fixed connection to a laptop and been displayed on a laptop or large display by a PC application. In 2010, the situation was considerably more complex: a single piece of content had seven possible paths to the user. In 2015, a single piece of content will have 17 possible paths to the user.
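The complexity gauge in Table 4 is a product of the listed factors. The sketch below multiplies the rounded values from the table; the dictionary keys and the `combinations` helper are names introduced here for illustration.

```python
from math import prod

# Factors copied from Table 4 (rounded values as published).
FACTORS = {
    2010: {
        "internet_users": 1.9e9,
        "devices_per_user": 3.4,
        "connection_types_per_device": 1.3,
        "display_types_per_device": 1.1,
        "applications_per_user": 2.5,
        "content_sources_per_application": 1.5,
    },
    2015: {
        "internet_users": 3.1e9,
        "devices_per_user": 3.9,
        "connection_types_per_device": 1.4,
        "display_types_per_device": 1.3,
        "applications_per_user": 3.5,
        "content_sources_per_application": 2.3,
    },
}


def combinations(factors):
    """Possible combinations as the product of all complexity factors."""
    return prod(factors.values())


c2010 = combinations(FACTORS[2010])
c2015 = combinations(FACTORS[2015])
print(f"2010: {c2010 / 1e9:.1f} billion")
print(f"2015: {c2015 / 1e9:.1f} billion")
print(f"Growth: {c2015 / c2010:.1f}x")
```

Using the rounded inputs, the products come out near 35 billion and 177 billion rather than exactly 33 billion and 183 billion; the published totals were presumably computed from unrounded inputs, which is an assumption here. The growth ratio is roughly fivefold either way.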

Appendix D: Regional Cloud Readiness Summary

Tables 5 and 6 summarize cloud readiness by region.

Table 5. Regional Cloud Readiness

| Network | Segment | Region | Average Download (kbps) | Average Upload (kbps) | Average Latency (ms) |
| --- | --- | --- | --- | --- | --- |
| Fixed | Business | APAC | 9,163 | 7,220 | 103 |
| | | CEE | 11,994 | 8,095 | 63 |
| | | LATAM | 3,119 | 2,085 | 151 |
| | | MEA | 2,354 | 1,396 | 245 |
| | | NA | 8,552 | 4,952 | 87 |
| | | WE | 16,759 | 11,219 | 61 |
| | Business Average | | 9,371 | 6,489 | 112 |
| | Consumer | APAC | 5,757 | 3,166 | 124 |
| | | CEE | 7,422 | 4,003 | 84 |
| | | LATAM | 2,070 | 721 | 151 |
| | | MEA | 1,691 | 795 | 225 |
| | | NA | 8,415 | 1,778 | 63 |
| | | WE | 9,369 | 2,380 | 72 |
| | Consumer Average | | 5,082 | 2,096 | 133 |
| Fixed Average | | | 6,674 | 3,726 | 125 |
| Mobile | Business | APAC | 3,125 | 2,272 | 300 |
| | | CEE | 6,091 | 4,075 | 111 |
| | | LATAM | 1,566 | 1,079 | 386 |
| | | MEA | 1,265 | 807 | 509 |
| | | NA | 4,674 | 2,935 | 204 |
| | | WE | 5,817 | 4,037 | 127 |
| | Business Average | | 3,992 | 2,750 | 251 |
| | Consumer | APAC | 2,159 | 1,025 | 305 |
| | | CEE | 3,430 | 1,800 | 200 |
| | | LATAM | 1,444 | 609 | 384 |
| | | MEA | 1,167 | 490 | 508 |
| | | NA | 4,580 | 1,304 | 173 |
| | | WE | 4,455 | 1,294 | 159 |
| | Consumer Average | | 2,567 | 1,047 | 307 |
| Mobile Average | | | 3,005 | 1,571 | 290 |
| Global Average | | | 4,987 | 2,735 | 201 |

Source: Ookla Speedtest Data and Cisco Analysis 2011

Table 6. Regional Broadband Penetration (Percentages Indicate Users with Broadband Access Per Region)

| Region | Fixed Broadband Subscriptions (2011) | Mobile Broadband Users (2011) | Population |
| --- | --- | --- | --- |
| Asia Pacific (APAC) | 217,136,050 (6%) | 198,471,250 (6%) | 3,779,499,930 |
| Central and Eastern Europe (CEE) | 41,361,106 (10%) | 31,987,471 (8%) | 405,220,643 |
| Latin America (LATAM) | 39,277,443 (7%) | 39,934,907 (4%) | 577,978,544 |
| Middle East and Africa (MEA) | 8,638,426 (1%) | 14,536,458 (2%) | 1,045,062,933 |
| North America (NA) | 91,882,741 (27%) | 187,160,230 (54%) | 344,352,893 |
| Western Europe (WE) | 128,637,208 (26%) | 116,954,942 (18%) | 491,668,145 |

Source: ITU, Informa Telecoms and Media 2011

Appendix E: Regional Download and Upload Peak Speeds

Download and upload peak speeds measured (shown in Tables 7 through 9) are the average 95th percentile sample for all countries per region, which represents the highest speed capabilities by region. From an average fixed peak download perspective, Western Europe leads with 64 Mbps and North America follows with 45.1 Mbps.
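The "average 95th percentile" measure can be illustrated as a two-step calculation: take the 95th percentile of the speed samples within each country, then average those values across the countries in a region. The sketch below uses the nearest-rank percentile definition, which is an assumption (the report does not state which percentile method was used), and the sample data is invented.

```python
def percentile_nearest_rank(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100, avoiding floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


def regional_peak(samples_by_country, pct=95):
    """Average of the per-country percentile values for one region."""
    peaks = [percentile_nearest_rank(s, pct) for s in samples_by_country.values()]
    return sum(peaks) / len(peaks)


# Hypothetical speed samples in kbps for two invented countries.
samples = {
    "country_a": list(range(1000, 21000, 1000)),  # 1,000 to 20,000 kbps
    "country_b": list(range(500, 10500, 500)),    # 500 to 10,000 kbps
}

print(regional_peak(samples))
```

Because each country contributes one value regardless of how many tests it recorded, this form of averaging is unweighted; whether the report weighted countries by sample count or population is not stated.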

Table 7. Regional Download and Upload Peak Speeds

| Network | Segment | Region | Average of Peak Download | Average of Peak Upload |
| --- | --- | --- | --- | --- |
| Fixed | Business | APAC | 45,512 | 144,791 |
| | | CEE | 58,698 | 167,676 |
| | | LATAM | 15,086 | 55,233 |
| | | MEA | 13,310 | 39,232 |
| | | NA | 60,923 | 411,957 |
| | | WE | 100,371 | 256,335 |
| | Business Total | | 50,545 | 149,295 |
| | Consumer | APAC | 22,144 | 102,934 |
| | | CEE | 33,530 | 158,827 |
| | | LATAM | 7,422 | 36,697 |
| | | MEA | 9,187 | 51,013 |
| | | NA | 29,343 | 449,401 |
| | | WE | 40,158 | 170,471 |
| | Consumer Average | | 21,592 | 104,406 |
| Fixed Average | | | 32,339 | 121,068 |
| Mobile | Business | APAC | 11,924 | 30,057 |
| | | CEE | 17,956 | 29,277 |
| | | LATAM | 5,752 | 35,256 |
| | | MEA | 5,438 | 15,420 |
| | | NA | 17,795 | 436,817 |
| | | WE | 17,366 | 31,193 |
| | Business Average | | 12,999 | 43,178 |
| | Consumer | APAC | 8,102 | 52,155 |
| | | CEE | 12,113 | 43,488 |
| | | LATAM | 5,681 | 30,882 |
| | | MEA | 4,570 | 24,670 |
| | | NA | 15,714 | 1,271,508 |
| | | WE | 14,664 | 667,195 |
| | Consumer Average | | 9,144 | 182,056 |
| Mobile Average | | | 10,330 | 139,325 |
| Grand Average | | | 22,217 | 129,464 |

Table 8. Fixed Speeds by Lead Countries Per Region

| Region | Country | Average Download (kbps) | Average Upload (kbps) | Average Latency (ms) |
| --- | --- | --- | --- | --- |
| APAC | Australia | 11,383 | 5,897 | 65 |
| | China | 2,623 | 1,961 | 142 |
| | India | 1,252 | 991 | 140 |
| | Japan | 23,063 | 15,058 | 42 |
| | New Zealand | 7,275 | 3,206 | 66 |
| | South Korea | 29,805 | 18,670 | 38 |
| CEE | Latvia | 18,420 | 13,428 | 45 |
| | Lithuania | 16,261 | 11,479 | 60 |
| | Russia | 6,684 | 6,522 | 82 |
| LATAM | Argentina | 2,038 | 796 | 83 |
| | Brazil | 3,406 | 674 | 100 |
| | Chile | 9,624 | 7,836 | 124 |
| | Mexico | 2,851 | 729 | 110 |
| NA | Canada | 9,137 | 3,645 | 68 |
| | United States | 7,831 | 3,085 | 82 |
| WE | Belgium | 16,085 | 6,419 | 42 |
| | France | 23,458 | 12,925 | 70 |
| | Germany | 18,916 | 8,530 | 78 |
| | Italy | 6,037 | 3,815 | 75 |
| | Netherlands | 23,063 | 11,977 | 51 |
| | Sweden | 15,536 | 6,534 | 57 |
| | United Kingdom | 10,987 | 4,990 | 76 |
| MEA | United Arab Emirates | 6,577 | 2,055 | 63 |
| | South Africa | 2,324 | 870 | 112 |
| | Egypt | 911 | 338 | 141 |

Table 9. Mobile Speeds by Lead Countries Per Region

| Region | Country | Average Download (kbps) | Average Upload (kbps) | Average Latency (ms) |
| --- | --- | --- | --- | --- |
| APAC | Australia | 3,223 | 1,251 | 225 |
| | China | 1,106 | 966 | 505 |
| | India | 966 | 952 | 339 |
| | Japan | 6,037 | 4,831 | 135 |
| | New Zealand | 3,917 | 1,532 | 151 |
| | South Korea | 6,708 | 5,368 | 122 |
| CEE | Latvia | 5,808 | 3,544 | 155 |
| | Lithuania | 4,888 | 2,789 | 152 |
| | Russia | 4,913 | 3,994 | 195 |
| LATAM | Argentina | 1,433 | 718 | 245 |
| | Brazil | 1,931 | 614 | 285 |
| | Chile | 4,241 | 3,511 | 251 |
| | Mexico | 1,821 | 633 | 250 |
| NA | Canada | 5,035 | 2,473 | 172 |
| | United States | 4,219 | 1,766 | 205 |
| WE | Belgium | 6,379 | 2,495 | 120 |
| | France | 4,114 | 2,589 | 154 |
| | Germany | 5,989 | 3,804 | 137 |
| | Italy | 3,594 | 2,450 | 167 |
| | Netherlands | 8,389 | 4,712 | 91 |
| | Sweden | 3,948 | 1,887 | 141 |
| | United Kingdom | 5,028 | 2,813 | 147 |
| MEA | United Arab Emirates | 5,276 | 1,506 | 118 |
| | South Africa | 1,607 | 720 | 247 |
| | Egypt | 768 | 368 | 332 |